5 Popular Application Server Setup Methods

Introduction

There are many factors to consider when deciding which server model to use for your application, such as performance, scalability, availability, reliability, cost, and manageability.

Here is a brief overview of some commonly used server setups, with a short explanation of the advantages and disadvantages of each. Keep in mind that these setups can be combined, and that every environment has different requirements, so there is no single correct configuration.

1. All-in-One Server

All the software is placed on a single server. A typical application would include a web server, application server, and database server. A common variant of this setup is the LAMP stack, which stands for Linux, Apache, MySQL, and PHP, all on one server.

Use case: Good for getting an application running quickly. This is the most basic setup, but it is difficult to scale and offers no isolation between the components of the application.

Advantages:

Simple.

Disadvantages:

The application and database compete for the same server resources (CPU, memory, I/O, etc.), which can hurt performance and makes it harder to determine which component is causing a problem.

Difficult to scale horizontally.

2. Separate Database Server

The database management system (DBMS) is separated from the rest of the environment to eliminate resource contention between the application and the database. This can also improve security by placing the database in a private network.

Use case: Good for getting an application running quickly while keeping the application and database from competing for the same system resources.

Advantages:

The application and database do not compete for the same server resources (CPU, memory, I/O, etc.).

Each tier (application and database) can be scaled vertically on its own by adding resources to whichever server needs more capacity.

Can enhance security by placing the database in a private network.

Disadvantages:

More complex setup than using a single server.

Performance issues may arise if there is significant latency between the two servers (e.g., geographically distant servers), or if the bandwidth is too low for the volume of data being transferred.
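The latency and bandwidth concern above can be estimated with simple arithmetic. The sketch below is illustrative only: the function names, the query counts, and the round-trip and bandwidth figures are assumptions, not measurements, and it assumes queries run one after another.

```python
def added_latency_ms(queries_per_request: int, round_trip_ms: float) -> float:
    """Network wait added to one request when queries run sequentially."""
    return queries_per_request * round_trip_ms


def transfer_time_ms(payload_bytes: int, bandwidth_mbps: float) -> float:
    """Time to move a result set over a link rated in megabits per second."""
    bits = payload_bytes * 8
    return bits / (bandwidth_mbps * 1_000_000) * 1000


# Hypothetical figures: 20 queries per page view.
nearby = added_latency_ms(20, 0.5)    # servers in the same data center
distant = added_latency_ms(20, 40.0)  # servers in different regions

print(f"nearby database:  {nearby:.0f} ms of network wait per request")
print(f"distant database: {distant:.0f} ms of network wait per request")
print(f"1 MB result over 100 Mbps: about {transfer_time_ms(1_000_000, 100):.0f} ms")
```

With these assumed numbers, the same application spends far longer waiting on the network when the database is far away, which is why the two servers are usually kept close together.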

3. Load Balancer (Reverse Proxy)

A load balancer can be added to a server environment to improve performance and reliability by distributing request processing across multiple servers. If one of the load-balanced servers fails, the other servers handle the incoming traffic until the failed server comes back online. A load balancer can also be used to serve multiple applications through the same domain and port, using a Layer 7 (application layer) reverse proxy.

Examples of software with reverse proxy load balancing capabilities: HAProxy, Nginx, and Varnish.
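To make the distribution and failover behavior concrete, here is a minimal sketch of round-robin selection that skips servers marked as failed. It is not how HAProxy or Nginx are implemented; the class name, method names, and backend names are hypothetical, and real load balancers detect failures with their own health checks.

```python
class RoundRobinBalancer:
    """Hands out backends in turn, skipping any that are marked down."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._next = 0  # index of the next backend to try

    def mark_down(self, backend):
        self.healthy.discard(backend)

    def mark_up(self, backend):
        if backend in self.backends:
            self.healthy.add(backend)

    def pick(self):
        # Try each backend at most once per call, starting where we left off.
        for _ in range(len(self.backends)):
            backend = self.backends[self._next]
            self._next = (self._next + 1) % len(self.backends)
            if backend in self.healthy:
                return backend
        raise RuntimeError("no healthy backends available")


balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
print([balancer.pick() for _ in range(4)])  # rotates through all three
balancer.mark_down("app-2")                 # simulate a failed server
print([balancer.pick() for _ in range(4)])  # app-2 is skipped
```

When a failed server recovers, calling `mark_up` puts it back into rotation.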

Use case: Useful in an environment that needs to scale by adding more servers (horizontal scaling).

Advantages:

Can scale by adding more servers.

Can help protect against DDoS attacks by limiting the number and frequency of connections from each client.

Disadvantages:

Load balancer can become a performance bottleneck if it doesn’t have enough resources or if it is misconfigured.

There may be issues regarding where SSL termination is done and how to handle session requirements.

The load balancer is a single point of failure: if the load balancer server fails, the entire system stops working.
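The connection limiting mentioned among the advantages above is commonly done with some form of rate limiting. The sketch below uses a token bucket per client address, which is one possible technique and not necessarily what any particular load balancer uses. The class names, the rate, and the burst size are assumptions; in practice this is configured in the load balancer itself rather than written by hand.

```python
import time


class TokenBucket:
    """Allows `rate_per_sec` requests per second, with bursts up to `burst`."""

    def __init__(self, rate_per_sec, burst, now=None):
        self.rate = rate_per_sec
        self.capacity = burst
        self.tokens = float(burst)
        self.updated = time.monotonic() if now is None else now

    def allow(self, now=None):
        now = time.monotonic() if now is None else now
        elapsed = max(0.0, now - self.updated)
        # Refill according to elapsed time, never beyond the burst capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class PerClientLimiter:
    """Keeps one token bucket per client address."""

    def __init__(self, rate_per_sec, burst):
        self.rate = rate_per_sec
        self.burst = burst
        self.buckets = {}

    def allow(self, client_ip, now=None):
        bucket = self.buckets.get(client_ip)
        if bucket is None:
            bucket = TokenBucket(self.rate, self.burst, now)
            self.buckets[client_ip] = bucket
        return bucket.allow(now)


limiter = PerClientLimiter(rate_per_sec=1.0, burst=2)
# Three requests at the same instant: the third exceeds the burst of 2.
print([limiter.allow("203.0.113.7", now=0.0) for _ in range(3)])
```

The optional `now` argument exists only to make the example deterministic; without it the limiter reads the system's monotonic clock.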

4. HTTP Accelerator (Caching Reverse Proxy)

The time it takes to load content from the server to the user can be reduced through various techniques, such as using an HTTP accelerator (also called a caching HTTP reverse proxy). An HTTP accelerator stores the content returned from the application in memory. When the same content is requested later, the accelerator simply retrieves it from memory and returns it to the user without any unnecessary interaction with the web server.

Examples of software that can accelerate HTTP: Varnish, Squid, Nginx.
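The caching behavior described above can be sketched in a few lines. This is a simplified illustration, not how Varnish, Squid, or Nginx work internally: the class name, the fixed time-to-live, and the `fetch_from_origin` callback are assumptions, and real accelerators also honor HTTP cache headers and bound their memory use.

```python
import time


class CachingProxy:
    """Serves responses from memory when possible, otherwise asks the origin."""

    def __init__(self, fetch_from_origin, ttl_seconds=60.0):
        self.fetch = fetch_from_origin  # callable: url -> response body
        self.ttl = ttl_seconds
        self.store = {}                 # url -> (expires_at, body)
        self.hits = 0
        self.misses = 0

    def get(self, url, now=None):
        now = time.monotonic() if now is None else now
        entry = self.store.get(url)
        if entry is not None and entry[0] > now:
            self.hits += 1              # cache hit: origin is not contacted
            return entry[1]
        self.misses += 1                # cache miss or expired entry
        body = self.fetch(url)
        self.store[url] = (now + self.ttl, body)
        return body

    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


origin_calls = []

def origin(url):
    origin_calls.append(url)
    return f"<html>page for {url}</html>"

proxy = CachingProxy(origin, ttl_seconds=60.0)
proxy.get("/article/1", now=0.0)   # miss: fetched from the origin
proxy.get("/article/1", now=10.0)  # hit: served from memory
print(len(origin_calls), proxy.hit_ratio())
```

The hit and miss counters make it possible to track the cache hit ratio, which matters for the tuning concerns listed below.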

Use case: Useful in an environment with content-heavy dynamic web applications or with many frequently accessed resources.

Advantages:

Increases web performance by reducing CPU load on the web server through caching and compression, thereby increasing efficiency.

Can be used as a load balancer.

Some caching software can protect against DDoS attacks.

Disadvantages:

Requires tuning for optimal performance.

If the cache hit ratio is low, the accelerator can reduce performance, because every miss adds a cache lookup on top of the request to the web server.
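The effect of the hit ratio can be estimated with a simple model: every request pays for a cache lookup, and every miss additionally pays for a trip to the web server. The function names and the timing figures below are assumptions chosen for illustration, and the model ignores concurrency and the cost of storing responses.

```python
def average_response_ms(hit_ratio, cache_lookup_ms, origin_ms):
    """Expected time per request when a cache sits in front of the origin."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return cache_lookup_ms + (1.0 - hit_ratio) * origin_ms


def break_even_hit_ratio(cache_lookup_ms, origin_ms):
    """Hit ratio above which the cache is faster than going to the origin directly."""
    return cache_lookup_ms / origin_ms


# Hypothetical timings: 2 ms cache lookup, 50 ms to generate a page.
for ratio in (0.0, 0.5, 0.9):
    print(f"hit ratio {ratio:.0%}: {average_response_ms(ratio, 2.0, 50.0):.1f} ms")
print(f"break-even hit ratio: {break_even_hit_ratio(2.0, 50.0):.0%}")
```

Under these assumptions a cache that never hits is slightly slower than no cache at all, while a high hit ratio cuts the average response time substantially.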

5. Master-Slave Database Replication

One way to improve the performance of a system with a higher number of read operations than write operations, such as a CMS, is to use master-slave database replication. Master-slave replication requires a master node and one or more slave nodes. In this setup, data updates are sent to the master node, and read operations are distributed across all nodes.

Use case: Good for improving read performance from the application’s database.

Here is an example of setting up master-slave replication with one slave node:

Advantages:

Improves read performance by distributing reads across slave nodes.

Can improve performance by using the master node exclusively for writes (and almost never for reads).

Disadvantages:

The application needs a mechanism to determine which database nodes to use for writes and which to use for reads.

Data updates to slave nodes are asynchronous, so the data the application reads may not always be the most recent.

If the master node fails, data updates may be interrupted until the issue is resolved.

No failover mechanism for master node failures.
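The routing mechanism mentioned in the disadvantages above can be as simple as inspecting each statement before sending it. The following is a minimal sketch under stated assumptions: the class name, the node names, and the `needs_latest` flag are hypothetical, and classifying statements by their first keyword is a simplification that real database drivers and proxies handle more carefully.

```python
import itertools


class ReplicatedDatabaseRouter:
    """Sends writes to the master and spreads reads across the slave nodes."""

    def __init__(self, master, replicas):
        self.master = master
        self.replicas = list(replicas)
        self._replica_cycle = itertools.cycle(self.replicas) if self.replicas else None

    def node_for(self, sql, needs_latest=False):
        stripped = sql.lstrip()
        first_word = stripped.split(None, 1)[0].upper() if stripped else ""
        is_read = first_word == "SELECT"
        # Replication is asynchronous, so reads that must see the most recent
        # write are sent to the master instead of a slave node.
        if is_read and not needs_latest and self._replica_cycle is not None:
            return next(self._replica_cycle)
        return self.master


router = ReplicatedDatabaseRouter("db-master", ["db-slave-1", "db-slave-2"])
print(router.node_for("SELECT title FROM posts"))                     # a slave node
print(router.node_for("UPDATE posts SET title = 'x' WHERE id = 1"))   # the master
print(router.node_for("SELECT * FROM cart", needs_latest=True))       # the master
```

The `needs_latest` flag is one way to work around stale reads; it trades some read scalability for consistency on the requests that need it.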

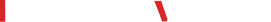

Example: Combining Everything

You can load balance the caching servers in addition to the application servers, and use database replication in the same environment. The goal of combining these techniques is to get the benefits of each without introducing too much complexity. Here is an example model of a server environment:

Assume that the load balancer is configured to recognize requests for static resources (such as images, CSS, and JavaScript) and send those requests directly to the caching server, while sending all other requests to the application server.

Here is a description of what happens when a user sends a request for dynamic content:

1. The user sends a request for dynamic content to https://example.com/ (load balancer).

2. The load balancer forwards the request to app-backend.

3. The app-backend reads data from the database and returns the content to the load balancer.

4. The load balancer sends the content back to the user.

If the user requests static content:

1. The load balancer checks with cache-backend to see if the requested content is available (cache-hit) or not (cache-miss).

2. If cache-hit: return the requested content to the load balancer and move to step 7. If cache-miss: the cache server forwards the request to app-backend, via the load balancer.

3. The load balancer forwards the request to app-backend.

4. The app-backend reads data from the database and returns the requested content to the load balancer.

5. The load balancer forwards the content to cache-backend.

6. The cache-backend caches the content and returns it to the load balancer.

7. The load balancer returns the requested content to the user.

This environment still has two single points of failure (the load balancer and the master database), but it provides the other reliability and performance benefits described in each section above.
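The two request flows described above can be traced with a small simulation. This is a sketch, not a real deployment: the class name, the list of file suffixes treated as static, and the generated response bodies are assumptions, and the database read is folded into the application backend for brevity.

```python
STATIC_SUFFIXES = (".png", ".jpg", ".css", ".js")  # assumed definition of "static"


class Environment:
    """Load balancer in front of a cache-backend and an app-backend."""

    def __init__(self):
        self.cache = {}        # stands in for cache-backend
        self.app_requests = 0  # how often app-backend was reached

    def app_backend(self, path):
        self.app_requests += 1
        return f"content for {path}"  # a real app would read the database here

    def handle(self, path):
        """Load balancer entry point: returns (body, route taken)."""
        if not path.endswith(STATIC_SUFFIXES):
            # Dynamic content goes straight to app-backend.
            return self.app_backend(path), "dynamic"
        if path in self.cache:
            # Cache hit: answer without touching app-backend.
            return self.cache[path], "cache-hit"
        # Cache miss: fetch from app-backend, store, then answer.
        body = self.app_backend(path)
        self.cache[path] = body
        return body, "cache-miss"


env = Environment()
for path in ("/dashboard", "/logo.png", "/logo.png"):
    body, route = env.handle(path)
    print(f"{path:12} -> {route}")
print("requests that reached app-backend:", env.app_requests)
```

Only the first request for a static file reaches the application server; repeated requests for the same file are answered from the cache.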

Conclusion

Now that you are familiar with some basic server setups, you should have a good idea of which ones to use for your application. If you’re looking to improve your server’s performance, try the simpler methods first, then gradually move to the more complex ones.

By Mitchell Anicas – DigitalOcean